AXCIOMA Execution Framework

This article describes the features of the AXCIOMA Execution Framework (ExF).

Introduction

The AXCIOMA Execution Framework (ExF) gives developers full concurrency control for component-based applications. AXCIOMA ExF is a commercial extension provided by Remedy IT. It can be installed as a plugin within an AXCIOMA development environment.

AXCIOMA ExF decouples middleware event generation/reception from component event handling so that the component developer is guaranteed to be safe from threading and code reentrancy issues, without losing the ability to fully use multithreaded architectures to scale applications.

AXCIOMA ExF captures and encapsulates events for any interaction pattern or connector implementation (synchronous requests, asynchronous replies, state and/or data events, or custom connectors), allowing them to be scheduled asynchronously and subsequently handled in a serialized manner.

Background

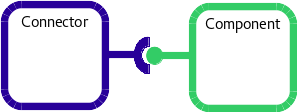

In the default AXCIOMA distribution, the connection between connector instances and components is a direct local call connection for each port interface, which gives the most direct execution sequence from event generation/reception to the handling of the event.

This connection scheme is depicted in Figure 1.

In this scheme the component provides an interface reference of its facet executor to the receptacle of the connector. When receiving (or generating) an event, the connector can then call the component’s executor directly through the provided reference, which keeps the execution sequence as short as possible.

However, this connection and execution scheme can present the developer with serious concurrency challenges: the component has no control over the execution context in which its code is called, because the connector code determines that execution context.
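The loss of control over the execution context can be illustrated with a minimal Python sketch. This is not AXCIOMA code: `Component`, `DirectConnector`, `on_event`, and `receive` are hypothetical names chosen for illustration, and Python threads stand in for whatever threads a connector implementation might use.

```python
import threading


class Component:
    """Hypothetical component that records which thread ran its handler."""

    def __init__(self):
        self.handler_threads = []

    def on_event(self, payload):
        # The component cannot choose this thread; the caller decides.
        self.handler_threads.append(threading.current_thread().name)


class DirectConnector:
    """Hypothetical connector holding a direct reference to the executor."""

    def __init__(self, executor):
        self.executor = executor

    def receive(self, payload):
        # Direct local call: the handler runs in the connector's own thread.
        self.executor.on_event(payload)


component = Component()
connector = DirectConnector(component)

# Three connector threads deliver events concurrently.
workers = [
    threading.Thread(target=connector.receive, args=(i,), name=f"connector-{i}")
    for i in range(3)
]
for worker in workers:
    worker.start()
for worker in workers:
    worker.join()

print(sorted(component.handler_threads))
```

Every handler invocation runs on a connector thread, so any shared state inside the component would need its own locking in this scheme.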

While the currently available default (non-ExF) AXCIOMA connector implementations (CORBA4CCM, AMI4CCM, TT4CCM, DDS4CCM, and PSDD4CCM) run single threaded by default (in the default single-threaded locality runtime environment), this may prove difficult to maintain for future versions of these or other implementations. AXCIOMA is intended to provide a middleware-agnostic development environment that explicitly allows for the introduction of alternate connector implementations. Without a solution that is independent of the underlying connector implementations, maintaining a predictable execution context for component developers would repeatedly pose considerable development challenges. Furthermore, keeping event handling single threaded by having only a single thread receive or generate events would be a serious constraint that diminishes scalability options.

Apart from concurrency issues, there is also the risk of reentrancy in certain middleware implementations such as TAOX11. Its thread reuse features are effective at reducing latency but may cause unexpected challenges for component developers.

AXCIOMA ExF provides a solution to these challenges by decoupling the direct local connection between connector and component instance and providing an execution framework offering controlled asynchronous, priority based event handling.

Implementation

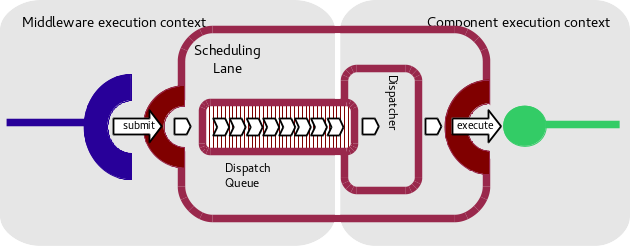

AXCIOMA ExF provides a solution for concurrency challenges by implementing an execution framework that places scheduling lanes between the connector and the component instances as schematically depicted in Figure 2.

The scheduling lane decouples the connector’s execution context from the component’s execution context through a configurable event queue and dispatcher.

With ExF, after event reception/generation the connector submits the properly encapsulated event to the scheduling lane configured for the target component, from where the event is entered into the scheduling queue of the dispatcher. The dispatcher, which executes in a configurable execution context (single or multi threaded), retrieves events from the queue and dispatches them to the component instances connected to the scheduling lane. Dispatching is sequential per component instance: any component instance will only ever get a single event at a time to handle.
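As a rough illustration of this decoupling, the following Python sketch places a queue and a single dispatcher thread between the submitting threads and the component. It is a simplified model under stated assumptions, not the ExF implementation: `SchedulingLane`, `submit`, `close`, and `Component` are hypothetical names, and event encapsulation is reduced to a plain tuple.

```python
import queue
import threading


class Component:
    """Hypothetical component that records handled events and handler threads."""

    def __init__(self, name):
        self.name = name
        self.handled = []
        self.handler_threads = set()

    def on_event(self, payload):
        self.handled.append(payload)
        self.handler_threads.add(threading.current_thread().name)


class SchedulingLane:
    """Hypothetical lane: an event queue plus a single-threaded dispatcher."""

    _STOP = object()

    def __init__(self):
        self._queue = queue.Queue()
        self._thread = threading.Thread(target=self._dispatch, name="dispatcher")
        self._thread.start()

    def submit(self, component, payload):
        # Runs in the connector's thread and returns without calling the handler.
        self._queue.put((component, payload))

    def _dispatch(self):
        # Runs in the dispatcher's thread: one event at a time.
        while True:
            item = self._queue.get()
            if item is self._STOP:
                break
            component, payload = item
            component.on_event(payload)

    def close(self):
        self._queue.put(self._STOP)
        self._thread.join()


lane = SchedulingLane()
comp = Component("c1")

# Five connector threads submit concurrently.
producers = [threading.Thread(target=lane.submit, args=(comp, i)) for i in range(5)]
for producer in producers:
    producer.start()
for producer in producers:
    producer.join()
lane.close()

print(sorted(comp.handled), comp.handler_threads)
```

All handlers run on the dispatcher thread regardless of how many threads submit events, which is the property that lets the component code stay free of locking.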

This execution sequence is depicted in the following figure.

Although these figures show a completely different, more complex organization of components and code than the standard (non-ExF) connections in AXCIOMA, this has no consequences for component developers, except that it allows simpler code because thread safety and non-reentrant (NRE) execution are guaranteed.

All code changes are either in the ExF framework components, which are independent of the interaction pattern, or in the fully generated connector implementations. In the end, the component code handling the event executes in exactly the same way as in a setup without ExF, using the unaltered component interfaces. Binary (compiled) component artifacts can in fact be deployed in environments using regular or ExF-enhanced containers without the need for recompilation.

The deployment plan is also not affected by ExF. The ExF core modules loaded (configured) into the DnCX11 framework handle this additional connection setup automatically. The deployment plan will still only specify a connection between a port of the (ExF capable) connector instance and the component instance.

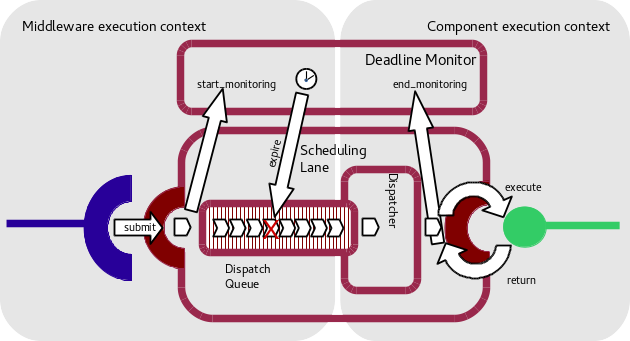

The priority of each event scheduled for a single component instance, port, or event/request id can be configured in the deployment plan. By default all scheduled events have the same priority. In addition, the queuing policy of the dispatch queue for a component instance can be configured and set to FIFO (default) or LIFO. Optionally, each event scheduled for a single component instance, port, or event/request id can also be configured in the deployment plan with a user-defined deadline time and type (NONE, EXPIRE, SOFT, HARD). Monitoring support for these deadlines requires a container configured for ExF scheduling as well as ExF deadline monitoring.
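The interaction between priority and queuing policy can be sketched as follows. This is an illustrative Python model, not the ExF dispatch queue: the `DispatchQueue` class is hypothetical, and it assumes that a larger number means a higher priority and that the FIFO/LIFO policy only decides the order among events of equal priority. Neither assumption is confirmed by the text above.

```python
import heapq
import itertools


class DispatchQueue:
    """Hypothetical priority queue with a FIFO or LIFO tie-breaking policy."""

    def __init__(self, policy="FIFO"):
        if policy not in ("FIFO", "LIFO"):
            raise ValueError(f"unknown queuing policy: {policy}")
        self._policy = policy
        self._heap = []
        self._counter = itertools.count()

    def push(self, event, priority=0):
        seq = next(self._counter)
        # FIFO: older entries first; LIFO: newer entries first.
        order = seq if self._policy == "FIFO" else -seq
        # heapq is a min-heap, so the priority is negated (assumption:
        # a larger number means a higher priority).
        heapq.heappush(self._heap, (-priority, order, event))

    def pop(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


def drain(policy):
    """Queue four events with two priority levels and return the pop order."""
    q = DispatchQueue(policy)
    q.push("a", priority=0)
    q.push("b", priority=5)
    q.push("c", priority=0)
    q.push("d", priority=5)
    return [q.pop() for _ in range(len(q))]


print(drain("FIFO"))  # ['b', 'd', 'a', 'c']
print(drain("LIFO"))  # ['d', 'b', 'c', 'a']
```

In both cases the priority 5 events leave the queue first; the policy only changes the order within each priority level.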

When ExF deadline monitoring support is configured and the event has been configured with a deadline type and time, the scheduling lane registers the event with the ExF deadline monitor before entering the event into the dispatch queue. After the event has been handled by the component instance, the dispatcher deregisters the event from the ExF deadline monitor again.

This process is depicted in Figure 4.

In case the deadline expires in between the moment the event is registered for deadline monitoring and the moment the dispatcher deregisters the event from deadline monitoring, the ExF deadline monitor will log an appropriate (error) message and cancel the event. Canceling will send an appropriate (error) reply as required by the connector implementation and unlock any waiting peers (if applicable).

Canceling an event does not interrupt the component instance if it has already started handling the event, but it does prevent any results of handling the event from being returned. If an event is canceled before the component instance has started handling it (while it is still queued or being dispatched), the event will never be handled ('seen') by the component instance.
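The register, cancel, and deregister sequence can be modelled in a few lines of Python. This is a deliberately simplified sketch and not the ExF deadline monitor: `Event`, `DeadlineMonitor`, `check`, and `dispatch` are hypothetical names, time is passed in explicitly as a number instead of being read from a clock, and the deadline types (NONE, EXPIRE, SOFT, HARD) are not modelled because their exact semantics are not described here.

```python
class Event:
    """Hypothetical encapsulated event with a deadline."""

    def __init__(self, name, deadline):
        self.name = name
        self.deadline = deadline
        self.canceled = False
        self.reply = None


class DeadlineMonitor:
    """Hypothetical monitor; 'now' is supplied by the caller for determinism."""

    def __init__(self):
        self._registered = {}
        self.log = []

    def register(self, event):
        self._registered[event.name] = event

    def deregister(self, event):
        self._registered.pop(event.name, None)

    def check(self, now):
        for event in list(self._registered.values()):
            if now > event.deadline:
                self.log.append(f"deadline expired: {event.name}")
                event.canceled = True
                event.reply = "error: deadline expired"  # error reply to the peer
                del self._registered[event.name]


def dispatch(event, handler, monitor):
    if event.canceled:
        return  # canceled while queued: the component never sees the event
    result = handler(event)
    if not event.canceled:
        event.reply = result  # results of a canceled event are dropped
    monitor.deregister(event)


monitor = DeadlineMonitor()
seen = []


def handler(event):
    seen.append(event.name)
    return f"ok: {event.name}"


def slow_handler(event):
    seen.append(event.name)
    monitor.check(now=25)  # the deadline passes while the handler is running
    return "late result"


on_time = Event("on_time", deadline=10)
queued_too_long = Event("queued_too_long", deadline=2)
slow = Event("slow", deadline=20)
for event in (on_time, queued_too_long, slow):
    monitor.register(event)

monitor.check(now=5)  # queued_too_long expires before it is dispatched
dispatch(on_time, handler, monitor)
dispatch(queued_too_long, handler, monitor)
dispatch(slow, slow_handler, monitor)

print(seen)
print(monitor.log)
```

The event that expired in the queue never reaches the handler, while the event that expired during handling is still processed to completion but its result is replaced by the error reply.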

Scheduling Lanes

Scheduling lanes accept (encapsulated) middleware events intended for specific component instances and route these to their associated dispatcher through the dispatch queue.

Scheduling lanes can be opened in either exclusive or non-exclusive mode. Exclusive scheduling lanes accept events for a single component instance (all ports of that component instance), while non-exclusive scheduling lanes accept events for multiple component instances.

Non-exclusive lanes can either be non-discriminative (for the default scheduling lane of an ExF-enabled container) or discriminative for user-defined groups of component instances. The latter are the 'grouped' scheduling lanes.
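The three kinds of lane can be summarized in a small Python model. This is only a sketch of the classification described above: `LaneMode`, `Lane`, and `select_lane` are hypothetical names, and the lookup order (configured lanes first, then the container's default lane) is an assumption made for illustration.

```python
from enum import Enum


class LaneMode(Enum):
    EXCLUSIVE = "exclusive"  # one component instance
    GROUPED = "grouped"      # user-defined group of component instances
    DEFAULT = "default"      # non-discriminative default lane


class Lane:
    """Hypothetical scheduling lane descriptor."""

    def __init__(self, name, mode, members=()):
        members = frozenset(members)
        if mode is LaneMode.EXCLUSIVE and len(members) != 1:
            raise ValueError("an exclusive lane serves exactly one instance")
        if mode is LaneMode.GROUPED and not members:
            raise ValueError("a grouped lane needs at least one instance")
        if mode is LaneMode.DEFAULT and members:
            raise ValueError("the default lane does not discriminate")
        self.name = name
        self.mode = mode
        self.members = members

    def accepts(self, instance_id):
        # The default lane accepts events for any component instance.
        return self.mode is LaneMode.DEFAULT or instance_id in self.members


def select_lane(instance_id, lanes, default_lane):
    """Assumed lookup order: configured lanes first, then the default lane."""
    for lane in lanes:
        if lane.accepts(instance_id):
            return lane
    return default_lane


lanes = [
    Lane("lane-a", LaneMode.EXCLUSIVE, {"comp_a"}),
    Lane("lane-bc", LaneMode.GROUPED, {"comp_b", "comp_c"}),
]
default_lane = Lane("default", LaneMode.DEFAULT)

for instance_id in ("comp_a", "comp_b", "comp_c", "comp_d"):
    print(instance_id, "->", select_lane(instance_id, lanes, default_lane).name)
```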

Dispatchers

Dispatchers dequeue scheduled events and route these to their targeted component instances.

Dispatchers can operate in various threading contexts depending on their associated scheduling lane. Exclusive scheduling lanes are always associated with a private dispatcher operating single threaded. Non-exclusive scheduling lanes are associated with a shared dispatcher, which can be configured for single-threaded (default), static thread pool (fixed thread count), or dynamic thread pool (minimum and maximum thread count) operation. With multi-threaded operation (static or dynamic) the dispatcher runs multiple threads that simultaneously dequeue and route scheduled events. Each component instance associated with the dispatcher through the non-exclusive scheduling lane is, however, guaranteed to be offered only a single event to handle at any one time (i.e. at any given moment each thread dispatches events for a different component instance than every other thread).
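One way to obtain this guarantee with a static thread pool is sketched below in Python. It is an illustrative technique, not the ExF implementation: `SharedDispatcher`, `Component`, and all method names are hypothetical. The idea is to keep a pending queue per component instance and to hand an instance to the pool only when no thread currently holds it.

```python
import collections
import queue
import threading
import time


class Component:
    """Hypothetical component that tracks how many handlers run at once."""

    def __init__(self, name):
        self.name = name
        self.handled = []
        self.active = 0
        self.max_active = 0
        self._stats_lock = threading.Lock()  # protects the counters only

    def on_event(self, payload):
        with self._stats_lock:
            self.active += 1
            self.max_active = max(self.max_active, self.active)
        time.sleep(0.001)  # simulate work so overlaps would be visible
        self.handled.append(payload)
        with self._stats_lock:
            self.active -= 1


class SharedDispatcher:
    """Hypothetical static thread pool with per-instance serialization."""

    _STOP = object()

    def __init__(self, thread_count):
        self._lock = threading.Lock()
        self._drained = threading.Condition(self._lock)
        self._pending = collections.defaultdict(collections.deque)
        self._scheduled = set()  # instances that are queued or being handled
        self._ready = queue.Queue()
        self._outstanding = 0
        self._threads = [threading.Thread(target=self._run) for _ in range(thread_count)]
        for thread in self._threads:
            thread.start()

    def submit(self, component, payload):
        with self._lock:
            self._outstanding += 1
            self._pending[component].append(payload)
            if component not in self._scheduled:
                self._scheduled.add(component)
                self._ready.put(component)

    def _run(self):
        while True:
            component = self._ready.get()
            if component is self._STOP:
                return
            with self._lock:
                payload = self._pending[component].popleft()
            component.on_event(payload)  # no other thread holds this instance
            with self._lock:
                if self._pending[component]:
                    self._ready.put(component)  # more work: hand it back
                else:
                    self._scheduled.discard(component)
                self._outstanding -= 1
                if self._outstanding == 0:
                    self._drained.notify_all()

    def close(self):
        with self._drained:
            while self._outstanding:
                self._drained.wait()  # wait until every event is handled
        for _ in self._threads:
            self._ready.put(self._STOP)
        for thread in self._threads:
            thread.join()


dispatcher = SharedDispatcher(thread_count=4)
components = [Component(f"c{i}") for i in range(3)]
for n in range(20):
    for component in components:
        dispatcher.submit(component, n)
dispatcher.close()

print([c.max_active for c in components])
```

Because an instance appears in the ready queue at most once, different instances can be served in parallel while each instance still sees its events one at a time and in submission order.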